How the next generation of professionals will think, adapt, and grow alongside AI — not behind it.

The Age of Collaboration, Not Full Automation

Human–machine collaboration is one of the most fascinating topics in artificial intelligence. Yet people are so focused on making AI smarter and setting new leaderboard records that most conversations about AI I hear today still orbit a single question: “Will AI replace us?” (perhaps thanks to the viral reach of The Terminator films), rather than “How can we coexist with AI?”

An interesting research study from 2025, conducted by Northeastern University and UCL, titled “Quantifying Human–AI Synergy”, reframes the question entirely.

Instead of asking whether AI outperforms humans, it asks:

“How much smarter can we become when we work together?”

I was excited by the number they report: on average, humans perform 20–30% better when collaborating with advanced language models such as GPT-4 (OpenAI) or Llama-3.1-8B (Meta).

What the scientists found

For years, AI research has relied on benchmarks such as MMLU or GSM8K, which measure a model’s intelligence in isolation: static prompts and fixed answers.

Yet human work is dynamic. It unfolds through conversation, interaction among people, and adaptation to changing context.

The authors, Riedl and Weidmann, introduce a Bayesian framework that finally measures interactional intelligence:

The synergy between a person’s individual ability and their collaborative ability with AI.

Their finding is simple but profound: working well with AI is a skill in its own right, and it lifts performance above both humans working alone and AI working alone.

The research also identifies who benefits most from working in pairs with AI.

Last but not least, it points to one of the most important skills humans need to build to collaborate effectively with large language models (LLMs) in the AI era.

Collaboration is a skill — and a learnable one

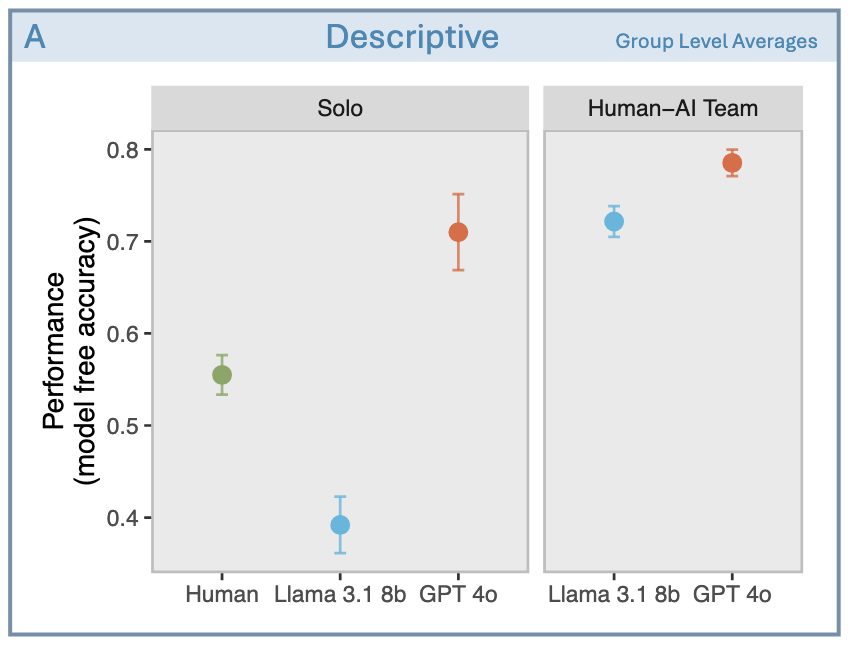

The study covers more than 600 people and compares humans working alone, the models working alone (GPT-4 and Llama-3.1-8B), and humans working together with each model. The tasks span several fields, including math, physics, and moral reasoning.

As the figure above (source: the paper) shows, GPT-4 on its own outperforms both humans alone and Llama-3.1-8B alone, yet it still falls short of humans collaborating with AI, even when the human’s partner is the smaller Llama-3.1-8B.

The study also reveals that some users consistently outperform others with AI, even when their solo performance is average.

Why? Because they have higher collaborative ability — the talent for using AI as a thinking partner.
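The paper’s actual Bayesian model is not reproduced here. As a rough illustration of the idea that solo ability and collaborative ability are separate, here is a minimal sketch assuming a simple logistic, item-response-style model: the chance of a correct answer depends on a person’s solo ability minus the question’s difficulty, and when working with AI, an assumed average AI boost plus the person’s own collaborative ability is added. The people, parameter names, and values are all hypothetical, and the sketch shows only the forward model, not the Bayesian estimation of these parameters from data.

```python
import math

def logistic(x: float) -> float:
    """Map a score on the logit scale to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))

def p_correct(solo_ability: float, difficulty: float, with_ai: bool = False,
              collab_ability: float = 0.0, ai_boost: float = 0.0) -> float:
    """Probability of a correct answer under a simplified Rasch-style model (an assumption).

    Alone:   logit = solo_ability - difficulty
    With AI: logit = solo_ability - difficulty + ai_boost + collab_ability
    """
    logit = solo_ability - difficulty
    if with_ai:
        logit += ai_boost + collab_ability
    return logistic(logit)

# Hypothetical people: same solo ability, different collaborative ability.
people = {"Ana": {"solo": 0.0, "collab": 1.0}, "Ben": {"solo": 0.0, "collab": -0.5}}
DIFFICULTY = 0.5  # hypothetical question difficulty
AI_BOOST = 0.8    # hypothetical average lift from having an AI partner

results = {}
for name, p in people.items():
    alone = p_correct(p["solo"], DIFFICULTY)
    together = p_correct(p["solo"], DIFFICULTY, with_ai=True,
                         collab_ability=p["collab"], ai_boost=AI_BOOST)
    results[name] = (alone, together)
    print(f"{name}: alone {alone:.2f}, with AI {together:.2f}")
```

With these made-up numbers, both people score 0.38 alone, while Ana reaches 0.79 with AI and Ben only 0.45. The collaborative term is the only thing separating them, which mirrors the pattern of users who look average alone but excel with AI.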

Honestly, teamwork is the most critical skill of this era. It is rare to find a research project or major achievement today that doesn’t come from many people working together.

And with AI? Collaboration is just as essential, and it is the key to sparking new ideas. Some companies with very small teams, even solo founders, now aim to reach unicorn valuations by making the most of AI.

This ability depends on a cognitive trait psychologists call Theory of Mind (ToM): the capacity to infer what another agent, human or machine, knows, believes, or intends.

The paper defines “Theory of Mind” (ToM) as the capacity to represent and reason about others’ mental states, such as their beliefs, goals, or intentions. It is crucial for explaining and predicting behavior in human-human interaction, enabling coordinated behaviour by allowing individuals to anticipate others’ actions and knowledge, establish common ground, disambiguate and repair communication, and adapt their contributions during joint tasks.

In AI terms, ToM helps people predict what a model will do, where it might fail, and how to guide AI toward the target.

People with strong ToM don’t just use AI — they coach it.

What Humans Must Learn, or Who Will Thrive in the AI Era

1. Understand how AI thinks — and where it doesn’t

In a nutshell, language models don’t know; they predict, even though many people claim they can think. They simulate understanding based on patterns in data, not experience. To collaborate effectively, humans must form accurate mental models of AI behaviour: its strengths, its limitations, and where it is uncertain.

When you can anticipate how AI might misinterpret your question, you’ve already begun to think synergistically.
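To make “they predict” concrete, here is a deliberately tiny toy: a bigram model that picks the next word purely from word counts in its training text. Real LLMs are neural networks over tokens with far longer context, so this illustrates the prediction idea only, not how GPT-4 or Llama work internally. The corpus and function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict

def train_bigrams(sentences: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words followed it in the training text."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def predict_next(counts: dict[str, Counter], prompt: str) -> str | None:
    """Predict the next word from the prompt's last word alone, by frequency."""
    words = prompt.lower().split()
    if not words or words[-1] not in counts:
        return None  # never seen this word: no pattern to fall back on
    return counts[words[-1]].most_common(1)[0][0]

corpus = [
    "the capital of australia is canberra",
    "the largest city in australia is sydney",
    "my favourite city is sydney",
]
model = train_bigrams(corpus)
print(predict_next(model, "The capital of Australia is"))
```

This prints `sydney`. The toy “knows” nothing about Australia; it returns whichever continuation was most frequent after “is” in its data. Anticipating that kind of pattern-driven slip is exactly what an accurate mental model of AI is for.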

Here, AI developers with technical insight hold a better position in the AI race. Beyond that, knowledge of current research on AI ethics, AI evaluation methods, and cultural bias in AI is in high demand.

2. Talk with AI; don’t just give it commands

Prompting isn’t a one-shot command — it’s a conversation. Great collaborators use iterative prompting:

Ask → Receive → Reflect → Refine → Re-ask.

Each cycle builds shared context — just like good teamwork. It’s not about manipulating syntax; it’s about maintaining a dialogue that evolves toward clarity.

Some years ago, I joined the research group of Professor Justine Cassell, Director Emerita of the Human-Computer Interaction Institute in the School of Computer Science at CMU (*). I found a wealth of research on making conversations between humans and machines effective, covering topics such as response speed in chat, practical discourse, and the use of puns in conversation. Decades of research like this are what allowed AI to reach today’s level of linguistic fluency.

(*) Honestly, after a few months I realised I couldn’t understand much of it, so I followed my true destination instead: my wife.

3. Develop metacognition — awareness of your own thinking

The best human–AI teams share one habit: reflection.

They question not only the model’s output but also their own reasoning:

- Why do I trust this result?

- What assumption am I making?

- What bias might the model — or I — be bringing in?

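This reflective loop is the foundation of adaptive collaboration. It is also a good way to avoid the kind of critical mistake Deloitte made in 2025, when a report it delivered to the Australian government was found to contain AI-generated errors, including references that did not exist.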

Suggestions for daily practice

1. Train Your Theory of Mind

When AI answers, ask: Why did it respond this way?

Simulate its reasoning process. You’re not anthropomorphising — you’re calibrating your cognitive radar.

2. Iterate in Micro-loops

Treat every session as a team effort: clarify, challenge, and confirm.

The more feedback cycles you create, the more your synergy coefficient improves.

3. Keep an AI Collaboration Log

After each task, note what worked, what failed, and what surprised you. Over time, you’ll build your own “interaction dataset” — a personal map of AI behavior patterns.
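A collaboration log needs no special tooling. As one possible sketch, each entry below is a single line of JSON (the JSON Lines convention) appended to a file, with the three fields the tip suggests: what worked, what failed, and what surprised you. The field names, file name, and sample notes are hypothetical; the demo writes to a temporary directory so it leaves nothing behind, whereas in practice you would point it at a permanent file.

```python
from __future__ import annotations

import json
import tempfile
from collections import Counter
from dataclasses import asdict, dataclass, field
from datetime import date
from pathlib import Path

@dataclass
class LogEntry:
    """One row of a personal AI collaboration log (field names are illustrative)."""
    task: str
    model: str
    worked: list[str] = field(default_factory=list)
    failed: list[str] = field(default_factory=list)
    surprised: list[str] = field(default_factory=list)
    day: str = field(default_factory=lambda: date.today().isoformat())

def append_entry(path: Path, entry: LogEntry) -> None:
    """Append one entry as a single JSON line."""
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(entry)) + "\n")

def load_entries(path: Path) -> list[LogEntry]:
    """Read every entry back; a missing file means an empty log."""
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as f:
        return [LogEntry(**json.loads(line)) for line in f if line.strip()]

def failure_counts(entries: list[LogEntry]) -> Counter:
    """Tally failure notes across tasks to reveal recurring patterns."""
    return Counter(note for entry in entries for note in entry.failed)

# Demo in a temporary directory; in practice use a permanent path.
with tempfile.TemporaryDirectory() as tmp:
    log_path = Path(tmp) / "ai_collaboration_log.jsonl"
    append_entry(log_path, LogEntry("Summarise a paper", "ChatGPT",
                                    worked=["clear structure"],
                                    failed=["invented a GitHub link"]))
    append_entry(log_path, LogEntry("Explain RadixAttention", "ChatGPT",
                                    failed=["invented a GitHub link"],
                                    surprised=["proposed its own variant"]))
    entries = load_entries(log_path)

print(failure_counts(entries).most_common(1))
```

Appending one line per task keeps the log easy to grep or load into a spreadsheet later, and tallying the `failed` notes is a quick way to turn scattered impressions into the behaviour map the tip describes.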

4. Check every source that AI cites in the result

Don’t trust a citation just because its title sounds convincing. Click every link, open the source, and check whether it exists and is reliable. In my experience, GitHub repositories and links to papers are the citations ChatGPT hallucinates most often.
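The first pass of clicking every link can be partly automated. The sketch below, assuming Python’s standard `urllib` and a deliberately rough URL pattern, pulls the links out of an AI answer and reports each one’s HTTP status. The function names are hypothetical. A reachable link only proves the page exists; you still have to open it and confirm it says what the AI claims. Some servers also reject HEAD requests, so an error code is a cue to check by hand, not proof of a hallucination.

```python
from __future__ import annotations

import http.client
import re
import urllib.error
import urllib.request

# Rough URL pattern: good enough for links pasted into chat answers, not RFC 3986-complete.
URL_PATTERN = re.compile(r"https?://[^\s<>\"')\]]+")

def extract_urls(text: str) -> list[str]:
    """Return unique URLs in order of appearance, minus trailing punctuation."""
    urls: list[str] = []
    for match in URL_PATTERN.findall(text):
        url = match.rstrip(".,;:")
        if url not in urls:
            urls.append(url)
    return urls

def check_url(url: str, timeout: float = 10.0) -> int | None:
    """Return the HTTP status code, or None if the link could not be reached at all."""
    request = urllib.request.Request(url, method="HEAD",
                                     headers={"User-Agent": "citation-check"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 404: the page does not exist
    except (OSError, ValueError, http.client.HTTPException):
        return None  # e.g. the domain does not resolve, or the URL is malformed
```

For example, `for url in extract_urls(answer): print(url, check_url(url))` lists every cited link next to its status; a 404 or `None` deserves a closer look before you rely on that source.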

5. Sometimes, accept the creativity

Here is one example. I had a conversation with ChatGPT to learn about RadixAttention, a technique from SGLang that optimises LLM inference by reusing the KV cache across requests that share a prompt prefix. Only after a very long conversation did I realise that ChatGPT was proposing something new to me. When I checked the method in the paper, it was completely different, yet the method ChatGPT presented was reasonable too. So far, I have not been able to verify whether the idea came from an existing paper, so I have to accept that ChatGPT created it.

Final Thought

Human–AI collaboration is a must. The most valuable professionals of the next decade won’t be those who merely know how to use AI, but those who know how to partner with it.

Because the real question is not “Can AI do my job?” but “How can we, together, create the future?”